Polar diving can be as exhilarating as it is dangerous, so prospective divers need to do extensive preparation to make the most of the experience.

As a hugely challenging activity, it requires specialised equipment, training and experience to explore the underwater world in these remote regions safely.

Read on to discover the benefits of polar diving and all the essentials required for a safe and unforgettable time. Let’s dive right in.

The Benefits of Polar Diving

It’s safe to say that not everyone will want to plunge into the icy depths of Arctic or Antarctic waters. But if you’re someone who does, there are several things you can gain from the experience.

Unrivalled wildlife viewing

Polar diving offers some of the best wildlife viewing opportunities on the planet. Species such as orcas, seals, walruses and penguins are commonly seen in these environments and — with a camera or two — divers can take some incredible photos.

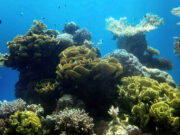

Unique landscapes

The icy, snow-covered landscapes of the polar regions create a unique environment for divers to explore. From towering icebergs to deep fjords, these regions offer a variety of stunning scenery found nowhere else in the world.

Potential health benefits

Many people who practise ice or polar diving say it helps them lose weight, boost their mental health and immune system, improve circulation and even increase their libido. However, staying safe and avoiding hypothermia has to be the number one priority.

Visibility

The lack of clarity in the water can be challenging, but it also adds a sense of mystery and adventure to each dive. The muffled sounds of the underwater environment add to the surreal atmosphere.

Remote locations

Divers in the polar regions can often explore areas far from civilisation. This makes for a unique experience and gives divers access to an environment practically untouched by humans.

Equipment Needed for a Successful Expedition

Now that you know what you can gain from the experience, it’s time to look at the gear. Investing in the right equipment is critical to diving safely in polar waters.

- Drysuit: The suit is one of the biggest differences between regular and polar diving. For polar diving, a drysuit rather than a wetsuit is essential. It should be made of a waterproof material such as neoprene and be well insulated for warmth and comfort.

- Undergarment: An undergarment, such as a thermal fleece, thermal underwear or a thick jumper, should be worn underneath the drysuit to provide an extra layer of insulation. Some high-tech undergarments also have built-in heating.

- Diving Mask: A diving mask lets you see clearly underwater. It should fit well and be comfortable to wear.

- Regulator: A diving regulator reduces the pressure of the breathing gas from the cylinder so that it can be breathed at depth. Cold-water regulators are designed to resist freezing, which keeps the gas flow properly controlled.

- Gloves: Gloves are essential for keeping your hands warm in polar water. They should fit well and allow enough movement for you to operate your equipment.

- Hood: A hood is vital for keeping your head warm and comfortable. It should fit well and provide enough insulation for the conditions.

There are plenty of other things divers may take with them, from dive watches and computers for monitoring depth to a dive torch for navigation and an underwater camera for capturing the beauty of the Arctic beneath the surface.

Things to Know Before Going

Aquatic Conditions & Potential Hazards

The Arctic has an average annual air temperature of -12°C, while the Antarctic ranges from -30°C to -70°C throughout the year. The Arctic Ocean stays at a fairly constant -1.8°C, close to the freezing point of seawater, so icebergs and sea ice are common hazards in some areas.

Visibility can also be an issue, depending on the ice formations at the dive site. Divers always face the risk of hypothermia and other cold-related illnesses, which is why appropriate diving gear and clothing should be worn at all times.

Marine Life Habitats

One of the biggest draws of the polar regions is their fascinating marine life. The Arctic’s marine ecosystem is home to over 5,000 animal species. Among the best known are polar bears, bowhead, beluga and grey whales, narwhals, walruses and various species of seal. In the Antarctic, divers may even be able to snorkel or dive with penguins.

Environmental Regulations & Guidelines

Polar expeditions are strictly regulated, both for safety and to protect the environment. The Smithsonian Institution is working with the dive industry to champion the protection of the polar environment. It emphasises the need to preserve polar biodiversity and habitats, especially for endangered species such as reindeer, Greenland sharks, walruses, belugas, ringed seals, harp seals and many others.

Polar diving offers a unique experience and several benefits for adventurous divers. There’s no shortage of things to explore, from stunning landscapes to abundant wildlife. By investing in the right equipment and following the necessary regulations, polar divers can enjoy this incredible environment safely.

About the author

Hannah Wilson is a Marketing Executive at Arctic Kingdom, who offer once-in-a-lifetime Arctic tours with the opportunity to see polar bears, narwhals and even the Northern Lights. Hannah has written extensively on the Arctic, including travel, getaways, sightseeing and polar activities.